1. Introduction: nilearn in a nutshell

1.1. What is nilearn: MVPA, decoding, predictive models, functional connectivity

Why use nilearn?

Nilearn makes it easy to use many advanced machine learning, pattern recognition and multivariate statistical techniques on neuroimaging data for applications such as MVPA (Multi-Voxel Pattern Analysis), decoding, predictive modelling, functional connectivity, brain parcellations and connectomes.

Nilearn can readily be used on task fMRI, resting-state, or VBM data.

For a machine-learning expert, the value of nilearn can be seen as domain-specific feature engineering: shaping neuroimaging data into a feature matrix well suited to statistical learning, or vice versa (mapping results from that matrix back into brain images).
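To make "shaping neuroimaging data into a feature matrix" concrete, here is a minimal sketch in plain NumPy, assuming the image data is already loaded as a 4D array (x, y, z, time) and the brain mask as a 3D boolean array. nilearn's own maskers (such as `NiftiMasker`) perform this job on images; the helper names `apply_mask` and `unmask` below are hypothetical and only illustrate the idea, not nilearn's implementation.

```python
import numpy as np


def apply_mask(data_4d, mask_3d):
    """Turn a 4D (x, y, z, t) array into a (t, n_voxels) feature matrix."""
    mask = np.asarray(mask_3d, dtype=bool)
    if data_4d.shape[:3] != mask.shape:
        raise ValueError("mask and data must share the same spatial shape")
    # Boolean indexing on the three spatial axes gives shape (n_voxels, t);
    # transpose so that rows are samples (time points) and columns are voxels.
    return data_4d[mask].T


def unmask(features, mask_3d):
    """Inverse operation: put a (t, n_voxels) matrix back into a 4D array."""
    mask = np.asarray(mask_3d, dtype=bool)
    n_timepoints = features.shape[0]
    out = np.zeros(mask.shape + (n_timepoints,), dtype=features.dtype)
    out[mask] = features.T  # voxels outside the mask stay at zero
    return out


# Synthetic example: a 4 x 5 x 6 volume observed at 10 time points.
rng = np.random.default_rng(0)
data = rng.normal(size=(4, 5, 6, 10))
mask = np.zeros((4, 5, 6), dtype=bool)
mask[1:3, 1:4, 2:5] = True  # 2 * 3 * 3 = 18 voxels inside the mask

X = apply_mask(data, mask)  # shape (10, 18): ready for scikit-learn
```

The round trip through `unmask` is what lets model outputs (for instance, one weight per voxel) be displayed as a brain map again.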

1.1.1. Why is machine learning relevant to NeuroImaging? A few examples!

Diagnosis and prognosis: Predicting a clinical score or even treatment response from brain imaging with supervised learning, e.g. [Mourao-Miranda 2012].

Measuring generalization scores:

High-dimensional multivariate statistics: From a statistical point of view, machine learning implements statistical estimation of models with a large number of parameters. Machine-learning techniques such as regularization can make this estimation possible despite the usually small number of observations in the neuroimaging domain [Varoquaux 2012]. This usage of machine learning requires some understanding of the models.
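The role of regularization can be illustrated with a small NumPy sketch on random data (not neuroimaging data). With fewer observations than parameters, the matrix X^T X is rank-deficient, so ordinary least squares has no unique solution; ridge regression, one common form of regularization, adds alpha times the identity and the system becomes solvable. The sizes and the value of alpha are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_features = 20, 100  # far fewer observations than parameters
X = rng.normal(size=(n_samples, n_features))
y = rng.normal(size=n_samples)

# X.T @ X is 100 x 100 but has rank at most n_samples (20), so it is
# singular and the ordinary least-squares normal equations are ill-posed.
gram = X.T @ X
rank = np.linalg.matrix_rank(gram)


def ridge(X, y, alpha):
    """Closed-form ridge regression: solve (X^T X + alpha * I) w = X^T y."""
    n_features = X.shape[1]
    # For alpha > 0 the matrix is positive definite, hence invertible.
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)


w = ridge(X, y, alpha=1.0)  # a unique, finite weight vector
```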

Data mining / exploration: Data-driven exploration of brain images. This includes the extraction of the major brain networks from resting-state data (“resting-state networks”) as well as the discovery of connectionally coherent functional modules (“connectivity-based parcellation”). Examples include extracting resting-state networks with ICA and parcellating the brain into regions with clustering.

1.1.2. Glossary: machine learning vocabulary

Supervised learning: Supervised learning is interested in predicting an output variable, or target, y, from data X. Typically, we start from labeled data (the training set): we need to know the y for each instance of X in order to train the model. Once learned, the model is applied to new, unlabeled data (the test set) to predict their labels. When evaluating a model, we do in fact know the true test labels, which is what lets us measure how well it predicts. There are essentially two possible goals: classification, which predicts a discrete label (e.g., patient versus control), and regression, which predicts a continuous value (e.g., age).

In neuroimaging research, supervised learning is typically used to predict an underlying cognitive process (e.g., emotional versus non-emotional theory of mind), a behavioral variable (e.g., reaction time or IQ), or diagnosis status (e.g., schizophrenia versus healthy) from brain images.
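The train/test workflow described above can be sketched with plain NumPy on synthetic data. This is a hypothetical regression problem, with ordinary least squares standing in for whatever model you would actually use; the key point is that the model only sees the training labels, and the held-out labels are used solely to score its predictions.

```python
import numpy as np

rng = np.random.default_rng(42)
n_samples, n_features = 100, 5
X = rng.normal(size=(n_samples, n_features))
true_w = np.array([1.0, -2.0, 0.5, 0.0, 3.0])
y = X @ true_w + rng.normal(scale=0.5, size=n_samples)  # continuous target

# Labeled training set and held-out test set.
X_train, y_train = X[:80], y[:80]
X_test, y_test = X[80:], y[80:]

# Fit ordinary least squares on the training set only.
w, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)

# Predict on the test set, then compare with the labels we held back
# using the coefficient of determination (R^2).
y_pred = X_test @ w
r2 = 1 - np.sum((y_test - y_pred) ** 2) / np.sum((y_test - y_test.mean()) ** 2)
```

For a classification goal the structure is identical; only the model and the score (e.g., accuracy instead of R^2) change.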

Unsupervised learning: Unsupervised learning is concerned with data X without any labels. It analyzes the structure of a dataset to find coherent underlying structure, for instance using clustering, or to extract latent factors, for instance using independent component analysis (ICA). In neuroimaging research, it is typically used to create functional and anatomical brain atlases by clustering based on connectivity, or to extract the main brain networks from resting-state correlations. An important avenue for future research will be the identification of potential neurobiological subgroups in psychiatric and neurological disorders.
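As an illustration of clustering without labels, here is a minimal k-means sketch in NumPy on two synthetic point clouds. In a parcellation setting the samples would be voxels and the features, for example, their time series; this sketch is not how nilearn implements parcellation, and the deterministic farthest-point initialisation is a simplifying choice made here for reproducibility.

```python
import numpy as np


def kmeans(X, n_clusters, n_iter=100):
    """Plain Lloyd's algorithm; returns (labels, centroids)."""
    # Farthest-point initialisation: start from the first sample, then
    # repeatedly add the sample farthest from all centroids chosen so far.
    centroids = [X[0]]
    for _ in range(1, n_clusters):
        d = np.min([np.sum((X - c) ** 2, axis=1) for c in centroids], axis=0)
        centroids.append(X[np.argmax(d)])
    centroids = np.array(centroids, dtype=float)

    for _ in range(n_iter):
        # Assignment step: each sample goes to its nearest centroid.
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Update step: move each centroid to the mean of its samples
        # (an empty cluster keeps its previous centroid).
        new_centroids = np.array([
            X[labels == k].mean(axis=0) if np.any(labels == k) else centroids[k]
            for k in range(n_clusters)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids


rng = np.random.default_rng(0)
blob_a = rng.normal(0.0, 1.0, size=(50, 2))
blob_b = rng.normal(10.0, 1.0, size=(50, 2))
X = np.vstack([blob_a, blob_b])
labels, centroids = kmeans(X, n_clusters=2)  # no labels were given
```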

1.2. Installing nilearn

Windows

First: download and install 64 bit Anaconda

We recommend that you install a complete scientific Python distribution like 64 bit Anaconda. Since it meets all the requirements of nilearn, it will save you time and trouble. You could also check PythonXY as an alternative.

Nilearn requires a Python installation and the following dependencies: ipython, scikit-learn, matplotlib and nibabel.

Second: open a Command Prompt

(Press "Win-R", type "cmd" and press "Enter". This will open the program cmd.exe, which is the command prompt)

Then type the following line and press "Enter":

pip install -U --user nilearn

Third: open IPython

(You can open it by writing "ipython" in the command prompt and pressing "Enter")

Then type in the following line and press "Enter":

In [1]: import nilearn

If no error occurs, you have installed nilearn correctly.

Mac

First: download and install 64 bit Anaconda

We recommend that you install a complete scientific Python distribution like 64 bit Anaconda. Since it meets all the requirements of nilearn, it will save you time and trouble.

Nilearn requires a Python installation and the following dependencies: ipython, scikit-learn, matplotlib and nibabel.

Second: open a Terminal

(Navigate to /Applications/Utilities and double-click on Terminal)

Then type the following line and press "Enter":

pip install -U --user nilearn

Third: open IPython

(You can open it by writing "ipython" in the terminal and pressing "Enter")

Then type in the following line and press "Enter":

In [1]: import nilearn

If no error occurs, you have installed nilearn correctly.

Linux

If you are using Ubuntu or Debian and have access to NeuroDebian (or can install it), simply install nilearn through NeuroDebian.

First: Install dependencies

Install, or ask your system administrator to install, the following packages using the distribution package manager: ipython, scikit-learn (sometimes called sklearn or python-sklearn), matplotlib (sometimes called python-matplotlib) and nibabel (sometimes called python-nibabel).

If you do not have access to the package manager, we recommend that you install a complete scientific Python distribution like 64 bit Anaconda. Since it meets all the requirements of nilearn, it will save you time and trouble.

Second: open a Terminal

(Press ctrl+alt+t and a Terminal console will pop up)

Then type the following line and press "Enter":

pip install -U --user nilearn

Third: open IPython

(You can open it by writing "ipython" in the terminal and pressing "Enter")

Then type in the following line and press "Enter":

In [1]: import nilearn

If no error occurs, you have installed nilearn correctly.

Get source

To install the development version:

Use git as an alternative to pip to get the latest nilearn version.

Simply run the following command (as a shell command, not a Python command):

git clone https://github.com/nilearn/nilearn.git

In the future, you can readily update your copy of nilearn by executing “git pull” in the nilearn root directory (as a shell command).

If you really do not want to use git, you may still download the latest development snapshot from the following link (unzipping required): https://github.com/nilearn/nilearn/archive/master.zip

To install, run the following in the nilearn directory created by the previous steps (again, as a shell command):

python setup.py install --user

Now, to test that everything is set up correctly, open IPython and type in the following line:

In [1]: import nilearn

If no error occurs, you have installed nilearn correctly.
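If `import nilearn` works but a later step complains about a missing package, a quick check of the dependencies listed above can help. The sketch below is an optional helper of our own (the name `check_dependencies` is hypothetical, not part of nilearn); it only checks whether each module can be found, using the standard library's `importlib.util.find_spec`. Note that some import names differ from package names: scikit-learn is imported as `sklearn` and ipython as `IPython`.

```python
import importlib.util

# Package name -> name used in "import ..." statements.
DEPENDENCIES = {
    "nilearn": "nilearn",
    "ipython": "IPython",
    "scikit-learn": "sklearn",
    "matplotlib": "matplotlib",
    "nibabel": "nibabel",
}


def check_dependencies(dependencies=DEPENDENCIES):
    """Return {package name: True/False} depending on whether it can be found."""
    return {
        package: importlib.util.find_spec(module) is not None
        for package, module in dependencies.items()
    }


if __name__ == "__main__":
    for package, found in check_dependencies().items():
        print(f"{package:15s} {'OK' if found else 'MISSING'}")
```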

1.3. Python for NeuroImaging, a quick start

If you don’t know Python, don’t panic. Python is easy. It is important to realize that most things you will do in nilearn require only a few or a few dozen lines of Python code. Here, we give the basics to help you get started. For a very quick introduction to the programming language, you can learn it online. For a full-blown introduction to using Python for science, see the scipy lecture notes.

We will be using IPython, which provides an interactive scientific environment that facilitates (e.g., interactive debugging) and improves (e.g., printing of large matrices) many everyday data-manipulation steps. Start it by typing:

ipython --matplotlib

This will open an interactive prompt:

```
IPython ?.?.? -- An enhanced Interactive Python.
?         -> Introduction and overview of IPython's features.
%quickref -> Quick reference.
help      -> Python's own help system.
object?   -> Details about 'object', use 'object??' for extra details.

In [1]: 1 + 2 * 3
Out[1]: 7
```

Note

The --matplotlib flag, which configures matplotlib for interactive use inside IPython, is available for IPython versions from 1.0 onwards. If you are using versions older than this, e.g. 0.13, you can use the --pylab flag instead.

>>> Prompt

Below we’ll be using >>> to indicate input lines. If you wish to copy and paste these input lines directly into IPython, click on the >>> located at the top right of the code block to toggle these prompt signs.

1.3.1. Your first steps with nilearn

First things first: nilearn does not have a graphical user interface. But you will soon realize that you don’t really need one. It is typically used interactively in IPython or in an automated way through Python scripts. Most importantly, nilearn functions that process neuroimaging data accept either a filename (i.e., a string variable) or a Nifti1Image object (as provided by nibabel). We call either of these “niimg-like”.

Suppose, for instance, that you have a t-map image saved in the Nifti file “t_map000.nii” in the directory “/home/user”. To visualize that image, you first need to import the plotting module:

>>> from nilearn import plotting

Then you can call the function that creates a “glass brain” by giving it the file name:

>>> plotting.plot_glass_brain("/home/user/t_map000.nii")

Note

There are many other plotting functions. Take your time to have a look at the different options.

Many functions exist for simple operations on images, such as those in the nilearn.image module for image manipulation, e.g. image.smooth_img for smoothing:

>>> from nilearn import image

>>> smoothed_img = image.smooth_img("/home/user/t_map000.nii", fwhm=5)

The returned value smoothed_img is a Nifti1Image object. It can either be passed to other nilearn functions operating on niimgs (neuroimaging images) or saved to disk with:

>>> smoothed_img.to_filename("/home/user/t_map000_smoothed.nii")
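The fwhm argument above is a full width at half maximum in millimetres. Gaussian filters are usually parametrized instead by a standard deviation sigma, often in voxel units, and the two are related by FWHM = 2·sqrt(2·ln 2)·sigma ≈ 2.3548·sigma. The sketch below shows that standard conversion and checks it numerically; the helper name `fwhm_to_sigma` is our own, and this is meant only to explain the parameter, not to reproduce nilearn's internals.

```python
import numpy as np


def fwhm_to_sigma(fwhm_mm, voxel_size_mm):
    """Convert a Gaussian FWHM in millimetres to a standard deviation in voxels."""
    sigma_mm = fwhm_mm / np.sqrt(8 * np.log(2))  # FWHM = 2 * sqrt(2 ln 2) * sigma
    return sigma_mm / voxel_size_mm


# Sanity check: sample a 1D Gaussian with that sigma and measure the width
# of the region where it stays at or above half of its maximum.
sigma = fwhm_to_sigma(5.0, voxel_size_mm=1.0)
x = np.linspace(-10, 10, 200001)
profile = np.exp(-x**2 / (2 * sigma**2))
above_half = x[profile >= 0.5]
measured_fwhm = above_half[-1] - above_half[0]  # close to 5.0
```

A sigma expressed in voxels is what a filter such as `scipy.ndimage.gaussian_filter` expects, which is why the voxel size enters the conversion: the same 5 mm FWHM spans fewer voxels in a 2 mm image than in a 1 mm one.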

Finally, nilearn deals with Nifti images that come in two flavors: 3D images, which represent a brain volume, and 4D images, which represent a series of brain volumes. To extract the n-th 3D image from a 4D image, you can use the image.index_img function (keep in mind that array indexing always starts at 0 in the Python language):

>>> first_volume = image.index_img("/home/user/fmri_volumes.nii", 0)

To loop over each individual volume of a 4D image, use image.iter_img:

```python
>>> for volume in image.iter_img("/home/user/fmri_volumes.nii"):
...     smoothed_img = image.smooth_img(volume, fwhm=5)
```
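Under the hood, a 4D image holds a 4D data array whose last axis indexes the volumes. The NumPy sketch below, on a small synthetic array standing in for that data, shows what indexing and iterating over volumes amount to. Unlike image.index_img and image.iter_img, plain arrays carry no affine (the voxel-to-world mapping), which is why working with the nilearn functions on images is usually preferable.

```python
import numpy as np

# Hypothetical stand-in for the voxel data of a small 4D image:
# a 4 x 5 x 6 grid of voxels observed at 10 time points (last axis).
fmri_data = np.arange(4 * 5 * 6 * 10, dtype=float).reshape(4, 5, 6, 10)

# Counterpart of image.index_img(img, 0): the first 3D volume (index 0).
first_volume = fmri_data[..., 0]

# Counterpart of image.iter_img(img): one 3D volume per time point.
volumes = [fmri_data[..., i] for i in range(fmri_data.shape[-1])]
```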

Exercise: varying the amount of smoothing

Want to sharpen your skills with nilearn?

Compute the mean EPI for the first subject of the ADHD dataset downloaded with nilearn.datasets.fetch_adhd and smooth it with an FWHM varying from 0 mm to 20 mm in increments of 5 mm.

Hints:

- Inspect the ‘.keys()’ of the object returned by nilearn.datasets.fetch_adhd

- Look at the “reference” section of the documentation: there is a function to compute the mean of a 4D image

- To perform a for loop in Python, you can use the “range” function

- The solution can be found here

Now, if you want out-of-the-box methods to process neuroimaging data, jump directly to the section you need:

1.3.2. Scientific computing with Python

If you plan to be a casual nilearn user, you will not need to manipulate numbers and arrays directly in Python. However, if you plan to go beyond that, here are a few pointers.

1.3.2.1. Basic numerics

Numerical arrays: The numerical data (e.g. matrices) are stored in numpy arrays:

```python
>>> import numpy as np
>>> t = np.linspace(1, 10, 2000)  # 2000 points between 1 and 10
>>> t
array([ 1.        ,  1.00450225,  1.0090045 , ...,  9.9909955 ,
        9.99549775, 10.        ])
>>> t / 2
array([0.5       , 0.50225113, 0.50450225, ..., 4.99549775,
       4.99774887, 5.        ])
>>> np.cos(t)  # Operations on arrays are defined in the numpy module
array([ 0.54030231,  0.53650833,  0.53270348, ..., -0.84393609,
       -0.84151234, -0.83907153])
>>> t[:3]  # In Python indexing is done with [] and starts at zero
array([1.        , 1.00450225, 1.0090045 ])
```

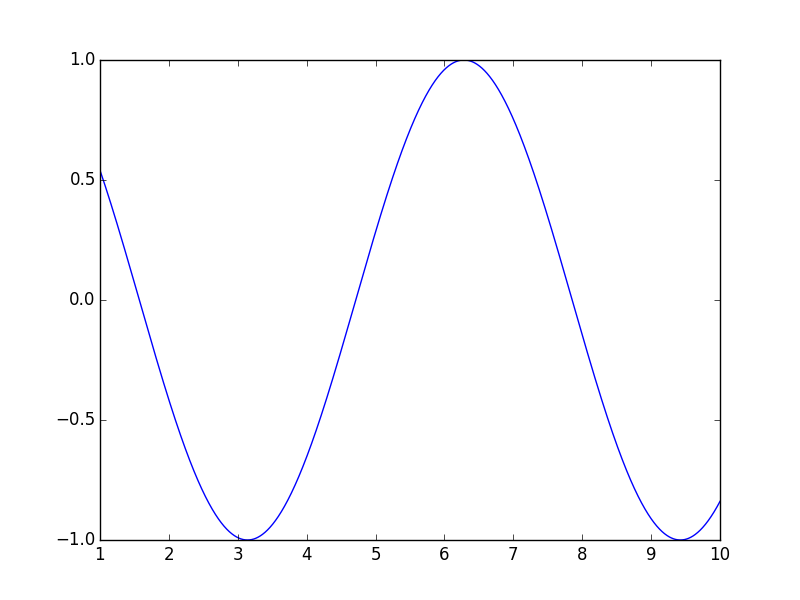

| Plotting and figures: | |||

>>> import matplotlib.pyplot as plt

>>> plt.plot(t, np.cos(t))

[<matplotlib.lines.Line2D object at ...>]

|

|||

Image processing:

```python
>>> from scipy import ndimage
>>> t_smooth = ndimage.gaussian_filter(t, sigma=2)
```

Signal processing:

```python
>>> from scipy import signal
>>> t_detrended = signal.detrend(t)
```
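By default, `scipy.signal.detrend` removes a linear trend, i.e. it subtracts the least-squares straight line from the signal. The NumPy sketch below reimplements that idea with `np.polyfit` to show what the call does; `linear_detrend` is a hypothetical helper written for this illustration. Since `t` above is itself a straight line, its detrended version is zero up to floating-point error.

```python
import numpy as np


def linear_detrend(signal_1d):
    """Remove the least-squares straight line from a 1D signal."""
    x = np.arange(signal_1d.size)
    slope, intercept = np.polyfit(x, signal_1d, deg=1)
    return signal_1d - (slope * x + intercept)


t = np.linspace(1, 10, 2000)
t_detrended = linear_detrend(t)  # essentially all zeros: t is a pure trend

# On a noisy signal with a drift, only the fluctuations around the line remain.
rng = np.random.default_rng(0)
drifting = 0.01 * np.arange(500) + rng.normal(size=500)
residual = linear_detrend(drifting)
```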

Much more:

1.3.2.2. Scikit-learn: machine learning in Python

What is scikit-learn?

Scikit-learn is a Python library for machine learning. Its strong points are:

- Easy to use and well documented

- Computationally efficient

- Provides a wide variety of standard machine learning methods for non-experts

The core concept in scikit-learn is the estimator object, for instance an SVC (support vector classifier). It is first created with the relevant parameters:

>>> from sklearn.svm import SVC

>>> svc = SVC(kernel='linear', C=1.)

These parameters are detailed in the documentation of the object: in IPython you can do:

```
In [3]: SVC?
...
Parameters
----------
C : float or None, optional (default=None)
    Penalty parameter C of the error term. If None then C is set
    to n_samples.

kernel : string, optional (default='rbf')
    Specifies the kernel type to be used in the algorithm.
    It must be one of 'linear', 'poly', 'rbf', 'sigmoid', 'precomputed'.
    If none is given, 'rbf' will be used.
...
```

Once the object is created, you can fit it on data. For instance, here we use a hand-written digits dataset, which comes with scikit-learn:

>>> from sklearn import datasets

>>> digits = datasets.load_digits()

>>> data = digits.data

>>> labels = digits.target

Let’s use all but the last 10 samples to train the SVC:

>>> svc.fit(data[:-10], labels[:-10])

SVC(C=1.0, ...)

and try predicting the labels on the left-out data:

>>> svc.predict(data[-10:])

array([5, 4, 8, 8, 4, 9, 0, 8, 9, 8])

>>> labels[-10:] # The actual labels

array([5, 4, 8, 8, 4, 9, 0, 8, 9, 8])
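The estimator pattern used above (create the object with its parameters, call fit on training data, then predict on new data) is a simple convention rather than magic. The sketch below writes a toy nearest-centroid classifier in plain NumPy that follows it: learned attributes end with an underscore and fit returns the estimator itself. The class name `ToyNearestCentroid` is hypothetical and for illustration only; scikit-learn ships a real `NearestCentroid` in `sklearn.neighbors`, and real estimators additionally inherit from scikit-learn's base classes.

```python
import numpy as np


class ToyNearestCentroid:
    """Hypothetical toy classifier following the fit/predict convention."""

    def fit(self, X, y):
        # Learned attributes end with an underscore, as in scikit-learn.
        self.classes_ = np.unique(y)
        self.centroids_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        return self  # returning self allows clf.fit(...).predict(...)

    def predict(self, X):
        # Assign each sample to the class whose centroid is closest.
        dists = ((X[:, None, :] - self.centroids_[None, :, :]) ** 2).sum(axis=2)
        return self.classes_[dists.argmin(axis=1)]


# Two well-separated synthetic classes, shuffled, with the last 20 held out.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-3, 1, size=(60, 2)), rng.normal(3, 1, size=(60, 2))])
y = np.array([0] * 60 + [1] * 60)
perm = rng.permutation(len(y))
X, y = X[perm], y[perm]

clf = ToyNearestCentroid().fit(X[:-20], y[:-20])
accuracy = np.mean(clf.predict(X[-20:]) == y[-20:])
```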

To find out more, try the scikit-learn tutorials.

1.3.3. Finding help

Reference material:

Mailing lists and forums: